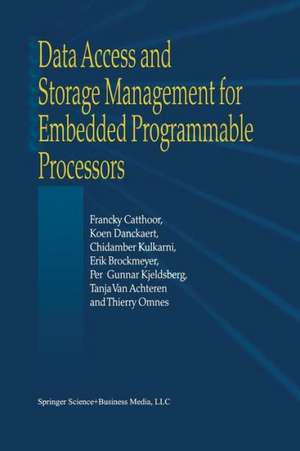

Data Access and Storage Management for Embedded Programmable Processors

Authors: Francky Catthoor, K. Danckaert, K.K. Kulkarni, E. Brockmeyer, Per Gunnar Kjeldsberg, T. van Achteren, Thierry Omnes. Language: English. Paperback, 2 Dec 2010

| All formats and editions | Price | Express |
|---|---|---|
| Paperback (1) | 988.48 lei, 6-8 weeks | |
| Springer Us, 2 Dec 2010 | 988.48 lei, 6-8 weeks | |
| Hardback (1) | 997.89 lei, 6-8 weeks | |
| Springer Us, 30 Mar 2002 | 997.89 lei, 6-8 weeks | |

Price: 988.48 lei

Old price: 1235.60 lei

-20% New

Express points: 1483

Estimated price in foreign currency:

189.21€ • 205.59$ • 159.04£


Book printed on demand

Economy delivery: 21 April-05 May

Order line: 021 569.72.76

Specifications

ISBN-13: 9781441949523

ISBN-10: 1441949526

Pages: 328

Illustrations: XIV, 306 p.

Dimensions: 155 x 235 x 17 mm

Weight: 0.46 kg

Edition: Softcover reprint of hardcover 1st ed. 2002

Publisher: Springer Us

Series: Springer

Place of publication: New York, NY, United States


Target audience

Research

Description

Data Access and Storage Management for Embedded Programmable Processors gives an overview of the state of the art in system-level data access and storage management for embedded programmable processors. The targeted application domain covers complex embedded real-time multi-media and communication applications. Many of these applications are data-dominated in the sense that their cost-related aspects, namely power consumption and footprint, are heavily influenced (if not dominated) by the data access and storage aspects. The material is mainly based on research at IMEC in this area in the period 1996-2001. To deal with the stringent timing requirements and the data-dominated characteristics of this domain, we have adopted a target architecture style that is compatible with modern embedded processors, and we have developed a systematic step-wise methodology to make the exploration and optimization of such applications feasible in a source-to-source precompilation approach.

In the first part of the book, we introduce the context and motivation, followed by a once-over-lightly view of the entire approach, illustrated on a relevant driver from the targeted application domain.

In part 2, we show how source-to-source code transformations play a crucial role in solving the data transfer and storage bottleneck mentioned earlier, which affects modern processor architectures for multi-media and telecommunication applications. This is especially true for embedded applications, where cost issues like memory footprint and power consumption are vital. It is also shown that many of these code transformations can be defined in a platform-independent way. The resulting optimized code behaves better on any of the modern platforms. The steps include global data-flow and loop transformations, data reuse decisions, high-level estimators, and the link with parallelisation and multi-processor partitioning.

In part 3, we discuss our research efforts relating to the mapping of embedded applications to specific memory organisations in embedded programmable processors. In a traditional processor-based environment, compilers perform memory optimizations assuming a fully fixed hardware target architecture with only maximal performance in mind. In an embedded context, however, cost issues, especially power consumption and memory footprint, also play a dominant role. Usually the timing requirements are given, and the application designer is mostly interested in the trade-off between the timing characteristics of the different application tasks and their cost effects. Pareto-type trade-off curves are the most suitable vehicle for addressing this design problem. The steps involved here include the storage cycle budget distribution, support of modern memory architectures like SDRAMs, and cache-related issues.


Contents

1. DTSE in Programmable Architectures. 2. Related Compiler Work on Data Transfer and Storage Management. 3. Global Loop Transformations. 4. System-Level Storage Requirements Estimation. 5. Automated Data Reuse Exploration Techniques. 6. Storage Cycle Budget Distribution. 7. Cache Optimization. 8. Demonstrator Designs. 9. Conclusions and Future Work. References. Bibliography. Index.